Publications

Selected Preprints (not peer reviewed)

2025

SFR-DeepResearch: Towards Effective Reinforcement Learning for Autonomously Reasoning Single Agents

Xuan-Phi Nguyen, Shrey Pandit, Revanth Gangi, Austin Xu, Silvio Savarese, Caiming Xiong, and Shafiq Joty. 2025.

PDF BibTex Slides

Hard2Verify: A Step-Level Verification Benchmark for Open-Ended Frontier Math

Shrey Pandit, Austin Xu, Xuan-Phi Nguyen, Yifei Ming, Caiming Xiong, and Shafiq Joty. 2025.

PDF BibTex Slides

J4R: Learning to Judge with Equivalent Initial State Group Relative Policy Optimization

Austin Xu, Yilun Zhou, Xuan-Phi Nguyen, Caiming Xiong, and Shafiq Joty. 2025.

PDF BibTex Slides

Published papers (journals, conferences, workshops)

All papers have been subject to peer review unless indicated otherwise. * indicates equal contributions.

2026

Foundational Automatic Evaluators: Scaling Multi-Task Generative Evaluator Training for Reasoning-Centric Domains

Austin Xu, Xuan-Phi Nguyen, Yilun Zhou, Chien-Sheng Wu, Caiming Xiong, and Shafiq Joty. In The Fourteenth International Conference on Learning Representations (ICLR-26) 2026.

PDF BibTex Slides

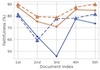

Variation in Verification: Understanding Verification Dynamics in Large Language Models

Yefan Zhou, Austin Xu, Yilun Zhou, Janvijay Singh, Jiang Gui, and Shafiq Joty. In The Fourteenth International Conference on Learning Representations (ICLR-26) 2026.

PDF BibTex Slides

On the Shelf Life of Finetuned LLM-Judges: Future Proofing, Backward Compatibility, and Question Generalization

Janvijay Singh, Austin Xu, Yilun Zhou, Yefan Zhou, Dilek Hakkani-Tür, and Shafiq Joty. In The Fourteenth International Conference on Learning Representations (ICLR-26) 2026.

PDF BibTex Slides

LiveResearchBench: A Live Benchmark for User-Centric Deep Research in the Wild

Jiayu Wang, Yifei Ming, Riya Dulepet, Qinglin Chen, Austin Xu, Zixuan Ke, Frederic Sala, Aws Albarghouthi, Caiming Xiong, and Shafiq Joty. In The Fourteenth International Conference on Learning Representations (ICLR-26) 2026.

PDF BibTex Slides

SWERank: Software Issue Localization with Code Ranking

Revanth Gangi, Tarun Suresh, JaeHyeok Doo, Ye Liu, Xuan-Phi Nguyen, Yingbo Zhou, Semih Yavuz, Caiming Xiong, Heng Ji, and Shafiq Joty. In The Fourteenth International Conference on Learning Representations (ICLR-26) 2026.

PDF BibTex Slides

References Improve LLM Alignment in Non-Verifiable Domains

Kejian Shi, Yixin Liu, Peifeng Wang, Alexander Fabbri, Shafiq Joty, and Arman Cohan. In The Fourteenth International Conference on Learning Representations (ICLR-26) 2026.

PDF BibTex Slides

DashboardQA: Benchmarking Multimodal Agents for Question Answering on Interactive Dashboards

Aaryaman Kartha, Ahmed Masry, Mohammed Saidul, Thinh Lang, Shadikur Rahman, Ridwan Mahbub, Mizanur Rahman, Mahir Ahmed, Md Rizwan, Enamul Hoque, and Shafiq Joty. In 19th Conference of the European Chapter of the Association for Computational Linguistics (EACL-26) 2026.

PDF BibTex Slides

Aligning Text, Code, and Vision: A Multi-Objective Reinforcement Learning Framework for Text-to-Visualization

Mizanur Rahman, Mohammed Saidul, Md Tahmid, Shafiq Joty, and Enamul Hoque. In 19th Conference of the European Chapter of the Association for Computational Linguistics (EACL-26) 2026.

PDF BibTex Slides

2025

Beyond Accuracy: Dissecting Mathematical Reasoning for LLMs Under Reinforcement Learning

Jiayu Wang, Yifei Ming, Zixuan Ke, Caiming Xiong, Shafiq Joty, Aws Albarghouthi, and Frederic Sala. In 2025 Conference on Neural Information Processing Systems (NeurIPS'25) 2025.

PDF Abstract BibTex Slides

The Emergence of Abstract Thought in Large Language Models Beyond Any Language

Yuxin Chen, Yiran Zhao, Yang Zhang, Kenji Kawaguchi, An Zhang, Shafiq Joty, Junnan Li, Tat-Seng Chua, Michael Qizhe, and Wenxuan Zhang. In 2025 Conference on Neural Information Processing Systems (NeurIPS'25) 2025.

PDF Abstract BibTex Slides

Direct Judgement Preference Optimization

Peifeng Wang, Austin Xu, Yilun Zhou, Caiming Xiong, and Shafiq Joty. In Proceedings of the 2025 Conference on Empirical Methods in Natural Language Processing (EMNLP-25) 2025.

PDF BibTex Slides

Demystifying Domain-adaptive Post-training for Financial LLMs

Zixuan Ke, Yifei Ming, Xuan-Phi Nguyen, Caiming Xiong, and Shafiq Joty. In Proceedings of the 2025 Conference on Empirical Methods in Natural Language Processing (EMNLP-25) 2025.

PDF BibTex Slides

From Charts to Fair Narratives: Uncovering and Mitigating Geo-Economic Biases in Chart-to-Text

Ridwan Mahbub, Mohammed Saidul, Mir Tafseer, Md Tahmid, Mizanur Rahman, Shafiq Joty, and Enamul Hoque. In Proceedings of the 2025 Conference on Empirical Methods in Natural Language Processing (EMNLP-25) 2025.

PDF BibTex Slides

Text2Vis: A Challenging and Diverse Benchmark for Generating Multimodal Visualizations from Text

Mizanur Rahman, Md Tahmid, Shafiq Joty, and Enamul Hoque. In Proceedings of the 2025 Conference on Empirical Methods in Natural Language Processing (EMNLP-25) 2025.

PDF BibTex Slides

CEMTM: Contextual Embedding-based Multimodal Topic Modeling

Amirhossein Abaskohi, Raymond Li, Chuyuan Li, Shafiq Joty, and Giuseppe Carenini. In Proceedings of the 2025 Conference on Empirical Methods in Natural Language Processing (EMNLP-25) 2025.

BibTex Slides

Topic-Guided Reinforcement Learning with LLMs for Enhancing Multi-Document Summarization

Chuyuan Li, Austin Xu, Shafiq Joty, and Giuseppe Carenini. In Proceedings of the 2025 Conference on Empirical Methods in Natural Language Processing (EMNLP-25 Findings) 2025.

BibTex Slides

CodeXEmbed: A Generalist Embedding Model Family for Multilingual and Multi-task Code Retrieval

Ye Liu, Rui Meng, Shafiq Joty, Silvio Savarese, Caiming Xiong, Yingbo Zhou, and Semih Yavuz. In Second Conference on Language Modeling (COLM-25) 2025.

PDF BibTex Slides

Evaluating Judges as Evaluators: The JETTS Benchmark of LLM-as-Judges as Test-Time Scaling Evaluators

Yilun Zhou, Austin Xu, Peifeng Wang, Caiming Xiong, and Shafiq Joty. In International Conference on Machine Learning (ICML-25) 2025.

PDF BibTex Slides

A Survey of Frontiers in LLM Reasoning: Inference Scaling, Learning to Reason, and Agentic Systems

Zixuan Ke, Fangkai Jiao, Yifei Ming, Xuan-Phi Nguyen, Austin Xu, Do Xuan, Minzhi Li, Chengwei Qin, Peifeng Wang, Silvio Savarese, Caiming Xiong, and Shafiq Joty. In Transactions on Machine Learning Research (TMLR) 2025.

PDF BibTex Slides

How Much are LLMs Contaminated? A Comprehensive Survey and the LLMSanitize Library

Mathieu Ravaut, Bosheng Ding, Fangkai Jiao, Hailin Chen, Xingxuan Li, Ruochen Zhao, Chengwei Qin, Caiming Xiong, and Shafiq Joty. In Transactions on Machine Learning Research (TMLR) 2025.

PDF Abstract BibTex Slides

Generalized Out-of-Distribution Detection and Beyond in Vision Language Model Era: A Survey

Atsuyuki Miyai, Jingkang Yang, Jingyang Zhang, Yifei Ming, Yueqian Lin, Qing Yu, Go Irie, Shafiq Joty, Yixuan Li, Hai Li, Ziwei Liu, Toshihiko Yamasaki, and Kiyoharu Aizawa. In Transactions on Machine Learning Research (TMLR) 2025.

PDF BibTex Slides

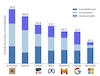

Does Context Matter? ContextualJudgeBench for Evaluating LLM-based Judges in Contextual Settings

Austin Xu, Srijan Bansal, Yifei Ming, Semih Yavuz, and Shafiq Joty. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (ACL-25) 2025.

PDF BibTex Slides

What Makes a Good Natural Language Prompt?

Do-Xuan Long, Duy Dinh, Ngoc-Hai Nguyen, Kenji Kawaguchi, Nancy Chen, Shafiq Joty, and Min-Yen Kan. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (ACL-25) 2025.

BibTex Slides

Can We Further Elicit Reasoning in LLMs? Critic-Guided Planning with Retrieval-Augmentation for Solving Challenging Tasks

Xingxuan Li, Weiwen Xu, Ruochen Zhao, Fangkai Jiao, Shafiq Joty, and Lidong Bing. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (ACL-25) 2025.

PDF BibTex Slides

Beyond Output Matching: Bidirectional Alignment for Enhanced In-Context Learning

Chengwei Qin, Wenhan Xia, Fangkai Jiao, Chen Chen, Yuchen Hu, Bosheng Ding, Ruirui Chen, and Shafiq Joty. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (ACL-25) 2025.

PDF BibTex Slides

Learning Auxiliary Tasks Improves Reference-Free Hallucination Detection in Open-Domain Long-Form Generation

Chengwei Qin, Wenxuan Zhou, Karthik Sankararaman, Nanshu Wang, Tengyu Xu, Alexander Radovic, Eryk Helenowski, Arya Talebzadeh, Aditya Tayade, Sinong Wang, Shafiq Joty, Han Fang, and Hao Ma. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (ACL-25) 2025.

PDF BibTex Slides

ChartQAPro: A More Diverse and Challenging Benchmark for Chart Question Answering

Ahmed Masry, Mohammed Islam, Mahir Ahmed, Aayush Bajaj, Firoz Kabir, Aaryaman Kartha, Tahmid Laskar, Mizanur Rahman, Shadikur Rahman, Mehrad Shahmohammadi, Megh Thakkar, Rizwan Parvez, Enamul Hoque, and Shafiq Joty. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (ACL-25 Findings) 2025.

PDF BibTex Slides

Relevant or Random: Can LLMs Truly Perform Analogical Reasoning?

Chengwei Qin, Wenhan Xia, Tan Wang, Fangkai Jiao, Yuchen Hu, Bosheng Ding, Ruirui Chen, and Shafiq Joty. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (ACL-25 Findings) 2025.

PDF BibTex Slides

Beyond In-Context Learning: Aligning Long-form Generation of Large Language Models via Task-Inherent Attribute Guidelines

Do Xuan, Duong Yen, Do Xuan, Anh Tuan, Kenji Kawaguchi, Shafiq Joty, Min-Yen Kan, and Nancy Chen. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (ACL-25 Findings) 2025.

PDF BibTex Slides

Why Vision Language Models Struggle with Visual Arithmetic? Towards Enhanced Chart and Geometry Understanding

Kung-Hsiang Huang, Can Qin, Haoyi Qiu, Philippe Laban, Shafiq Joty, Caiming Xiong, and Chien-Sheng Wu. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (ACL-25 Findings) 2025.

PDF BibTex Slides

Judging the Judges: Can Large Vision-Language Models Fairly Evaluate Chart Comprehension and Reasoning?

Tahmid Laskar, Mohammed Islam, Ridwan Mahbub, Ahmed Masry, Mizanur Rahman, Amran Bhuiyan, Mir Nayeem, Shafiq Joty, Enamul Hoque, and Jimmy Huang. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (ACL-25 Industry Track) 2025.

PDF BibTex Slides

Preference Optimization for Reasoning with Pseudo Feedback

Fangkai Jiao, Geyang Guo, Xingxing Zhang, Nancy F., Shafiq Joty, and Furu Wei. In International Conference on Learning Representations (ICLR-25 spotlight) 2025.

PDF BibTex Slides

FaithEval: Can Your Language Model Stay Faithful to Context, Even If "The Moon is Made of Marshmallows"

Yifei Ming, Senthil Purushwalkam, Shrey Pandit, Zixuan Ke, Xuan-Phi Nguyen, Caiming Xiong, and Shafiq Joty. In International Conference on Learning Representations (ICLR-25) 2025.

PDF BibTex Slides

On Positional Bias of Faithfulness for Long-form Summarization

David Wan, Jesse Vig, Mohit Bansal, and Shafiq Joty. In 2025 Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL-25) 2025.

PDF Abstract BibTex Slides

ReIFE: Re-evaluating Instruction-Following Evaluation

Yixin Liu, Kejian Shi, Alexander Fabbri, Yilun Zhao, Peifeng Wang, Chien-Sheng Wu, Shafiq Joty, and Arman Cohan. In 2025 Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL-25) 2025.

PDF Abstract BibTex Slides

ParaICL: Towards Robust Parallel In-Context Learning

Xingxuan Li, Xuan-Phi Nguyen, Shafiq Joty, and Lidong Bing. In 2025 Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL-25) 2025.

PDF Abstract BibTex Slides

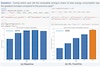

LLMs Are Biased Towards Output Formats! Systematically Evaluating and Mitigating Output Format Bias of LLMs

Do Long, Hai Ngoc, Tiviatis Sim, Hieu Dao, Shafiq Joty, Kenji Kawaguchi, Nancy Chen, and Min-Yen Kan. In 2025 Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL-25) 2025.

PDF Abstract BibTex Slides

Adaptation of Large Language Models

Zixuan Ke, Yifei Ming, and Shafiq Joty. In 2025 Annual Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 5: Tutorial Abstracts) (NAACL-25), pages 30-37, 2025.

PDF Abstract BibTex Slides

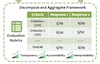

DnA-Eval: Enhancing Large Language Model Evaluation through Decomposition and Aggregation

Minzhi Li, Zhengyuan Liu, Shumin Deng, Shafiq Joty, Nancy Chen, and Min-Yen Kan. In Proceedings of the 31st International Conference on Computational Linguistics (COLING-25) 2025.

PDF Abstract BibTex Slides

ChartGemma: Visual Instruction-tuning for Chart Reasoning in the Wild

Ahmed Masry, Megh Thakkar, Aayush Bajaj, Aaryaman Kartha, Enamul Hoque, and Shafiq Joty. In Proceedings of the 31st International Conference on Computational Linguistics (COLING-25) 2025.

PDF Abstract BibTex Slides

2024

Learning Planning-based Reasoning by Trajectories Collection and Process Reward Synthesizing (Outstanding paper award)

Fangkai Jiao, Chengwei Qin, Zhengyuan Liu, Nancy Chen, and Shafiq Joty. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP'24) 2024.

PDF Abstract BibTex Slides

DataNarrative: Automated Data-Driven Storytelling with Visualizations and Texts

Mohammed Islam, Md Laskar, Md Parvez, Enamul Hoque, and Shafiq Joty. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP'24) 2024.

PDF Abstract BibTex Slides

Evaluating Psychological Safety of Large Language Models

Xingxuan Li, Yutong Li, Lin Qiu, Shafiq Joty, and Lidong Bing. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP'24) 2024.

PDF Abstract BibTex Slides

FOLIO: Natural Language Reasoning with First-Order Logic

Simeng Han, Hailey Schoelkopf, Yilun Zhao, Zhenting Qi, Martin Riddell, Wenfei Zhou, James Coady, David Peng, Yujie Qiao, Luke Benson, Lucy Sun, Alexander Wardle-Solano, Hannah Szabó, Ekaterina Zubova, Matthew Burtell, Jonathan Fan, Yixin Liu, Brian Wong, Malcolm Sailor, Ansong Ni, Linyong Nan, Jungo Kasai, Tao Yu, Rui Zhang, Alexander Fabbri, Wojciech Maciej, Semih Yavuz, Ye Liu, Victoria Lin, Shafiq Joty, Yingbo Zhou, Caiming Xiong, Rex Ying, Arman Cohan, and Dragomir Radev. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP'24) 2024.

PDF Abstract BibTex Slides

A Systematic Survey and Critical Review on Evaluating Large Language Models: Challenges, Limitations, and Recommendations

Md Laskar, Sawsan Alqahtani, M Bari, Mizanur Rahman, Mohammad Khan, Haidar Khan, Israt Jahan, Amran Bhuiyan, Chee Tan, Md Parvez, Enamul Hoque, Shafiq Joty, and Jimmy Huang. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP'24) 2024.

PDF Abstract BibTex Slides

Open-RAG: Enhanced Retrieval Augmented Reasoning with Open-Source Large Language Models

Shayekh Islam, Md Rahman, K Hossain, Enamul Hoque, Shafiq Joty, and Md Parvez. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP'24 Findings) 2024.

PDF Abstract BibTex Slides

Investigating the prompt leakage effect and black-box defenses for multi-turn LLM interactions

Divyansh Agarwal, Alexander Fabbri, Philippe Laban, Shafiq Joty, Caiming Xiong, and Chien-Sheng Wu. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP'24 Industry Track) 2024.

PDF Abstract BibTex Slides

P-FOLIO: Evaluating and Improving Logical Reasoning with Abundant Human-Written Reasoning Chains

Simeng Han, Aaron Yu, Rui Shen, Zhenting Qi, Martin Riddell, Wenfei Zhou, Yujie Qiao, Yilun Zhao, Semih Yavuz, Ye Liu, Shafiq Joty, Yingbo Zhou, Caiming Xiong, Rex Ying, Arman Cohan, and Dragomir Radev. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP'24 Findings) 2024.

PDF Abstract BibTex Slides

X-InstructBLIP: A Framework for aligning X-Modal instruction-aware representations to LLMs and Emergent Cross-modal Reasoning

Artemis Panagopoulou, Le Xue, Ning Yu, Junnan Li, Dongxu Li, Shafiq Joty, Ran Xu, Silvio Savarese, Caiming Xiong, and Juan-Carlos Niebles. In 2024 European Conference on Computer Vision (ECCV'24) 2024.

PDF Abstract BibTex Slides

XCodeEval: An Execution-based Large Scale Multilingual Multitask Benchmark for Code Understanding, Generation, Translation and Retrieval

Mohammad Khan, M Bari, Do Long, Weishi Wang, Md Parvez, and Shafiq Joty. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (ACL'24) 2024.

PDF Abstract BibTex Slides

On Context Utilization in Summarization with Large Language Models

Mathieu Ravaut, Aixin Sun, Nancy Chen, and Shafiq Joty. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (ACL'24) 2024.

PDF Abstract BibTex Slides

Democratizing LLMs for Low-Resource Languages by Leveraging their English Dominant Abilities with Linguistically-Diverse Prompts

Xuan-Phi Nguyen, Mahani Aljunied, Shafiq Joty, and Lidong Bing. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (ACL'24) 2024.

PDF Abstract BibTex Slides

ChartInstruct: Instruction Tuning for Chart Comprehension and Reasoning

Ahmed Masry, Mehrad Shahmohammadi, Md Parvez, Enamul Hoque, and Shafiq Joty. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (ACL'24 Findings) 2024.

PDF Abstract BibTex Slides

Data Augmentation using LLMs: Methods, Learning Paradigms and Challenges

Bosheng Ding, Chengwei Qin, Ruochen Zhao, Tianze Luo, Xinze Li, Guizhen Chen, Wenhan Xia, Junjie Hu, Anh-Tuan Luu, and Shafiq Joty. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (ACL'24 Findings) 2024.

PDF Abstract BibTex Slides

XL-HeadTags: Leveraging Multimodal Retrieval Augmentation for the Multilingual Generation of News Headlines and Tags

Faisal Shohan, Mir Nayeem, Samsul Islam, Abu Akash, and Shafiq Joty. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (ACL'24 Findings) 2024.

Abstract BibTex Slides

Embrace Divergence for Richer Insights: A Multi-document Summarization Benchmark and a Case Study on Summarizing Diverse Information from News Articles

Kung-Hsiang Huang, Philippe Laban, Alexander Fabbri, Prafulla Kumar, Shafiq Joty, Caiming Xiong, and Chien-Sheng Wu. In 2024 Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL-24) 2024.

PDF Abstract BibTex Slides

Exploring Self-supervised Logic-enhanced Training for Large Language Models

Fangkai Jiao, Zhiyang Teng, Bosheng Ding, Zhengyuan Liu, Nancy Chen, and Shafiq Joty. In 2024 Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL-24) 2024.

PDF Abstract BibTex Slides

Lifelong Event Detection with Embedding Space Separation and Compaction

Chengwei Qin, Ruirui Chen, Ruochen Zhao, Wenhan Xia, and Shafiq Joty. In 2024 Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL-24) 2024.

PDF Abstract BibTex Slides

Benchmarking Generation and Evaluation Capabilities of Large Language Models for Instruction Controllable Summarization

Yixin Liu, Alexander Fabbri, Jiawen Chen, Yilun Zhao, Simeng Han, Shafiq Joty, Pengfei Liu, Dragomir Radev, Chien-Sheng Wu, and Arman Cohan. In 2024 Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL-24 Findings) 2024.

PDF Abstract BibTex Slides

AdaPT: A Set of Guidelines for Hyperbolic Multimodal Multilingual NLP

Ramit Sawhney, Megh Thakkar, Vishwa Shah, Shrey Pandit, and Shafiq Joty. In 2024 Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL-24 Findings) 2024.

PDF Abstract BibTex Slides

DIVKNOWQA: Assessing the Reasoning Ability of LLMs via Open-Domain Question Answering over Knowledge Base and Text

Wenting Zhao, Ye Liu, Tong Niu, Yao Wan, Philip Yu, Shafiq Joty, Yingbo Zhou, and Semih Yavuz. In 2024 Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL-24 Findings) 2024.

PDF Abstract BibTex Slides

Diffusion Model Alignment Using Direct Preference Optimization

Bram Wallace, Meihua Dang, Rafael Rafailov, Linqi Zhou, Aaron Lou, Senthil Purushwalkam, Stefano Ermon, Caiming Xiong, Shafiq Joty, and Nikhil Naik. In International Conference on Computer Vision and Pattern Recognition (CVPR-24) 2024.

PDF Abstract BibTex Slides

CodeChain: Towards Modular Code Generation Through Chain of Self-revisions with Representative Sub-modules

Hung Le, Hailin Chen, Amrita Saha, Akash Gokul, Doyen Sahoo, and Shafiq Joty. In International Conference on Learning Representations (ICLR-24) 2024.

PDF Abstract BibTex Slides

Chain of Knowledge: A Framework for Grounding Large Language Models with Structured Knowledge Bases

Xingxuan Li, Ruochen Zhao, Yew Ken, Bosheng Ding, Shafiq Joty, Soujanya Poria, and Lidong Bing. In International Conference on Learning Representations (ICLR-24) 2024.

PDF Abstract BibTex Slides

L2CEval: Evaluating Language-to-Code Generation Capabilities of Large Language Models

Ansong Ni, Pengcheng Yin, Yilun Zhao, Martin Riddell, Troy Feng, Rui Shen, Stephen Yin, Ye Liu, Semih Yavuz, Caiming Xiong, Shafiq Joty, Yingbo Zhou, Dragomir Radev, and Arman Cohan. In Transactions of ACL (TACL) 2024.

PDF Abstract BibTex

From Pixels to Insights: A Survey on Automatic Chart Understanding in the Era of Large Foundation Models

Kung-Hsiang Huang, Hou Chan, Yi Fung, Haoyi Qiu, Mingyang Zhou, Shafiq Joty, Shih-Fu Chang, and Heng Ji. In IEEE Transactions on Knowledge and Data Engineering (TKDE) 2024.

PDF Abstract BibTex

Improving conversational recommender system via contextual and time-aware modeling with less domain-specific knowledge

Lingzhi Wang, Shafiq Joty, Wei Gao, Xingshan Zeng, and Kam-Fai Wong. In IEEE Transactions on Knowledge and Data Engineering (TKDE) 2024.

PDF Abstract BibTex

Explaining Language Model Predictions with High-Impact Concepts

Ruochen Zhao, Shafiq Joty, Yongjie Wang, and Tan Wang. In Findings of ACL (EACL-24) 2024.

PDF Abstract BibTex Slides

Efficiently Aligned Cross-Lingual Transfer Learning for Conversational Tasks using Prompt-Tuning

Lifu Tu, Jin Qu, Semih Yavuz, Shafiq Joty, Wenhao Liu, Caiming Xiong, and Yingbo Zhou. In Findings of ACL (EACL-24) 2024.

PDF Abstract BibTex Slides

2023

Personalized Distillation: Empowering Open-Sourced LLMs with Adaptive Learning for Code Generation

Hailin Chen, Amrita Saha, Shafiq Joty, and Steven Hoi. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (EMNLP'23) 2023.

Abstract BibTex Slides

Lifelong Sequence Generation with Dynamic Module Expansion and Adaptation

Chengwei Qin, Shafiq Joty, and Chen Chen. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (EMNLP'23) 2023.

Abstract BibTex Slides

UniChart: A Universal Vision-language Pretrained Model for Chart Comprehension and Reasoning

Ahmed Masry, Parsa Kavehzadeh, Do Long, Enamul Hoque, and Shafiq Joty. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (EMNLP'23) 2023.

PDF Abstract BibTex Slides

Towards Low-Resource Automatic Program Repair with Meta-Learning and Pretrained Language Models

Weishi Wang, Yue Wang, Shafiq Joty, and Steven Hoi. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (EMNLP'23) 2023.

Abstract BibTex Slides

SummEdits: Measuring LLM Ability at Factual Reasoning Through The Lens of Summarization

Philippe Laban, Wojciech Kryscinski, Divyansh Agarwal, Alexander Fabbri, Caiming Xiong, Shafiq Joty, and Chien-Sheng Wu. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (EMNLP'23) 2023.

PDF Abstract BibTex Slides

Towards Interpretable and Efficient Automatic Reference-Based Summarization Evaluation

Yixin Liu, Alex Fabbri, Pengfei Liu, Shafiq Joty, Chien-Sheng Wu, Caiming Xiong, and Dragomir Radev. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (EMNLP'23) 2023.

PDF Abstract BibTex Slides

Retrieving Multimodal Information for Augmented Generation: A Survey

Ruochen Zhao, Hailin Chen, Weishi Wang, Fangkai Jiao, Do Long, Chengwei Qin, Bosheng Ding, Xiaobao Guo, Minzhi Li, Xingxuan Li, and Shafiq Joty. In Findings of the 2023 Conference on Empirical Methods in Natural Language Processing (EMNLP'23 Findings) 2023.

PDF Abstract BibTex Slides

HPE: Answering Complex Questions over Text by Hybrid Question Parsing and Execution

Ye Liu, Semih Yavuz, Rui Meng, Dragomir Radev, Caiming Xiong, Shafiq Joty, and Yingbo Zhou. In Findings of the 2023 Conference on Empirical Methods in Natural Language Processing (EMNLP'23 Findings) 2023.

Abstract BibTex Slides

Verify-and-Edit: A Knowledge-Enhanced Chain-of-Thought Framework

Ruochen Zhao, Xingxuan Li, Shafiq Joty, Chengwei Qin, and Lidong Bing. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (ACL'23) 2023.

PDF Abstract BibTex Slides

Did You Read the Instructions? Rethinking the Effectiveness of Task Definitions in Instruction Learning

Fan Yin, Jesse Vig, Philippe Laban, Shafiq Joty, Caiming Xiong, and Chien-Sheng Jason. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (ACL'23) 2023.

Abstract BibTex Slides

Revisiting the Gold Standard: Grounding Summarization Evaluation with Robust Human Evaluation

Yixin Liu, Alex Fabbri, Pengfei Liu, Yilun Zhao, Linyong Nan, Ruilin Han, Simeng Han, Shafiq Joty, Chien-Sheng Jason, Caiming Xiong, and Dragomir Radev. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (ACL'23) 2023.

PDF Abstract BibTex Slides

SWiPE: A Dataset for Document-Level Simplification of Wikipedia Pages

Philippe Laban, Jesse Vig, Wojciech Kryscinski, Shafiq Joty, Caiming Xiong, and Chien-Sheng Jason. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (ACL'23) 2023.

PDF Abstract BibTex Slides

Randomized Smoothing with Masked Inference for Adversarially Robust Text Classification

Han Cheol, Shafiq Joty, Ruochen Zhao, Megh Thakkar, and Chi Xu. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (ACL'23) 2023.

PDF Abstract BibTex Slides

Learning to Initialize: Can Meta Learning Improve Cross-task Generalization in Prompt Tuning?

Chengwei Qin, Shafiq Joty, Qian Li, and Ruochen Zhao. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (ACL'23) 2023.

PDF Abstract BibTex Slides

Towards Robust Low-Resource Fine-Tuning with Multi-View Compressed Representations

Linlin Liu, Xingxuan Li, Megh Thakkar, Xin Li, Shafiq Joty, Luo Si, and Lidong Bing. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (ACL'23) 2023.

PDF Abstract BibTex Slides

Is GPT-3 a Good Data Annotator?

Bosheng Ding, Chengwei Qin, Linlin Liu, Yew Ken, Lidong Bing, Boyang Li, and Shafiq Joty. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (ACL'23) 2023.

PDF Abstract BibTex Slides

Modeling What-to-ask and How-to-ask for Answer-unaware Conversational Question Generation

Xuan Long, Bowei Zou, Shafiq Joty, Tran Tai, Liangming Pan, Nancy Chen, and Ai Ti. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (ACL'23) 2023.

PDF Abstract BibTex Slides

A Systematic Study of ChatGPT on Benchmark Datasets

Md Tahmid, M Saiful, Mizanur Rahman, Md Amran, Shafiq Joty, and Jimmy Huang. In Findings of the 61st Annual Meeting of the Association for Computational Linguistics (ACL'23 Findings) 2023.

PDF Abstract BibTex Slides

Contrastive Learning with Generated Representations for Inductive Knowledge Graph Embedding

Qian Li, Shafiq Joty, Daling Wang, Shi Feng, Yifei Zhang, and Chengwei Qin. In Findings of the 61st Annual Meeting of the Association for Computational Linguistics (ACL'23 Findings) 2023.

Abstract BibTex Slides

Unsupervised Summarization Re-ranking

Mathieu Ravaut, Shafiq Joty, and Nancy Chen. In Findings of the 61st Annual Meeting of the Association for Computational Linguistics (ACL'23 Findings) 2023.

PDF Abstract BibTex Slides

Efficient Text-to-Code Retrieval with Cascaded Fast and Slow Transformer Models

Akhilesh Gotmare, Junnan Li, Shafiq Joty, and Steven Hoi. In ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering (ESEC/FSE 2023) 2023.

PDF Abstract BibTex Slides

RAP-Gen: Retrieval-Augmented Patch Generation with CodeT5 for Automatic Program Repair

Weishi Wang, Yue Wang, Shafiq Joty, and Steven Hoi. In ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering (ESEC/FSE 2023) 2023.

PDF Abstract BibTex Slides

Long Sequence Modeling with XGen: A 7B LLM Trained on 8K Input Sequence Length

Erik Nijkamp*, Tian Xie*, Hiroaki Hayashi*, Bo Pang*, Congying Xia*, Chen Xing, Jesse Vig, Semih Yavuz, Philippe Laban, Ben Krause, Senthil Purushwalkam, Tong Niu, Wojciech Kryscinski, Lidiya Murakhovs'ka, Prafulla Choubey, Alex Fabbri, Ye Liu, Rui Meng, Lifu Tu, Meghana Bhat, Chien-Sheng Wu, Silvio Savarese, Yingbo Zhou, Shafiq Joty+, and Caiming Xiong+. 2023.

PDF Abstract BibTex Slides

NLP+Vis: NLP Meets Visualization

Shafiq Joty, Enamul Hoque, and Jesse Vig. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing: Tutorial Abstracts (EMNLP'23 Tutorial), pages 1-6, 2023.

PDF Abstract BibTex Slides

2022

SleepQA: A Health Coaching Dataset on Sleep for Extractive Question Answering

Iva Bojic, Qi Ong, Megh Thakkar, Esha Kamran, Irving Shua, Rei Pang, Jessica Chen, Vaaruni Nayak, Shafiq Joty, and Josip Car. In 2022 Machine Learning for Health (Proceedings Track) (ML4H@NeurIPS'22) 2022.

PDF Abstract BibTex Slides

Towards Summary Candidates Fusion

Mathieu Ravaut, Shafiq Joty, and Nancy Chen. In the 2022 Conference on Empirical Methods in Natural Language Processing (EMNLP'22) 2022.

PDF Abstract BibTex Slides

Learning Label Modular Prompts for Text Classification in the Wild

Hailin Chen, Amrita Saha, Shafiq Joty, and Steven Hoi. In the 2022 Conference on Empirical Methods in Natural Language Processing (EMNLP'22) 2022.

Abstract BibTex Slides

OpenCQA: Open-ended Question Answering with Charts

Shankar Kantharaj, Xuan Long, Rixie Tiffany, Jia Qing, Enamul Hoque, and Shafiq Joty. In the 2022 Conference on Empirical Methods in Natural Language Processing (EMNLP'22) 2022.

PDF Abstract BibTex Slides

Enhancing Multilingual Language Model with Massive Multilingual Knowledge Triples

Linlin Liu, Xin Li, Ruidan He, Lidong Bing, Shafiq Joty, and Luo Si. In the 2022 Conference on Empirical Methods in Natural Language Processing (EMNLP'22) 2022.

PDF Abstract BibTex Slides

Alleviating Sparsity of Open Knowledge Graphs with Ternary Contrastive Learning

Qian Li, Shafiq Joty, Daling Wang, Shi Feng, and Yifei Zhang. In the 2022 Conference on Empirical Methods in Natural Language Processing (Findings) (EMNLP'22) 2022.

PDF Abstract BibTex Slides

Data Selection Curriculum for Neural Machine Translation

Tasnim Mohiuddin, Philipp Koehn, Vishrav Chaudhary, James Cross, Shruti Bhosale, and Shafiq Joty. In the 2022 Conference on Empirical Methods in Natural Language Processing (Findings) (EMNLP'22) 2022.

PDF Abstract BibTex Slides

BotSIM: An End-to-End Bot Simulation Framework for Commercial Task-Oriented Dialog Systems

Guangsen Wang, Samson Tan, Shafiq Joty, Gang Wu, Jimmy Au, and Steven Hoi. In the 2022 Conference on Empirical Methods in Natural Language Processing (demo) (EMNLP'22) 2022.

PDF Abstract BibTex Slides

Refining Low-Resource Unsupervised Translation by Language Disentanglement of Multilingual Translation Model

Xuan-Phi Nguyen, Shafiq Joty, Wu Kui, and Ai Ti. In 2022 Conference on Neural Information Processing Systems (NeurIPS'22) 2022.

PDF Abstract BibTex Slides

GradMask: Gradient-Guided Token Masking for Textual Adversarial Example Detection

Han-Cheol Moon, Shafiq Joty, and Xu Chi. In 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining (SIGKDD'22) 2022.

PDF Abstract BibTex Slides

Rethinking Self-Supervision Objectives for Generalizable Coherence Modeling

Prathyusha Jwalapuram, Shafiq Joty, and Xiang Lin. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (ACL'22) 2022.

PDF Abstract BibTex Slides

Continual Few-shot Relation Learning via Embedding Space Regularization and Data Augmentation

Chengwei Qin and Shafiq Joty. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (ACL'22) 2022.

PDF Abstract BibTex Slides

SummaReranker: A Multi-Task Mixture-of-Experts Re-Ranking Framework for Abstractive Summarization

Mathieu Ravaut, Shafiq Joty, and Nancy Chen. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (ACL'22) 2022.

PDF Abstract BibTex Slides

Chart-to-Text: A Large-Scale Benchmark for Chart Summarization

Shankar Kantharaj, Rixie Leong, Xiang Lin, Ahmed Masry, Megh Thakkar, Enamul Hoque, and Shafiq Joty. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (ACL'22) 2022.

PDF Abstract BibTex Slides

GlobalWoZ: Globalizing MultiWoZ to Develop Multilingual Task-Oriented Dialogue Systems

Bosheng Ding, Junjie Hu, Lidong Bing, Mahani Aljunied, Shafiq Joty, Luo Si, and Chunyan Miao. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (ACL'22) 2022.

PDF Abstract BibTex Slides

ChartQA: A Benchmark for Question Answering about Charts with Visual and Logical Reasoning

Ahmed Masry, Do Xuan, Jia Qing, Shafiq Joty, and Enamul Hoque. In Findings of the 60th Annual Meeting of the Association for Computational Linguistics (ACL'22 Findings) 2022.

PDF Abstract BibTex Slides

LFPT5: A Unified Framework for Lifelong Few-shot Language Learning Based on Prompt Tuning of T5

Chengwei Qin and Shafiq Joty. In International Conference on Learning Representations (ICLR-22) 2022.

PDF Abstract BibTex Slides

Contrastive Clustering to Mine Pseudo Parallel Data for Unsupervised Translation

Xuan-Phi Nguyen, Hongyu Gong, Yun Tang, Changhan Wang, Philipp Koehn, and Shafiq Joty. In International Conference on Learning Representations (ICLR-22) 2022.

PDF Abstract BibTex Slides

Weakly Supervised Neuro-Symbolic Module Networks for Numerical Reasoning

Amrita Saha, Shafiq Joty, and Steven Hoi. In Thirty-Sixth AAAI Conference on Artificial Intelligence (AAAI'22) 2022.

PDF Abstract BibTex Slides

CoHS-CQG: Context and History Selection for Conversational Question Generation

Xuan Long, Bowei Zou, Liangming Pan, Nancy Chen, Shafiq Joty, and Ai Ti. In Proceedings of the 29th International Conference on Computational Linguistics (COLING'22) 2022.

PDF Abstract BibTex Slides

Towards Multi-Sense Cross-Lingual Alignment of Contextual Embeddings

Linlin Liu, Thien Hai, Shafiq Joty, Lidong Bing, and Luo Si. In Proceedings of the 29th International Conference on Computational Linguistics (COLING'22) 2022.

PDF Abstract BibTex Slides

Unsupervised Cross-lingual Image Captioning

Jiahui Gao, Yi Zhou, Philip Yu, Shafiq Joty, and Jiuxiang Gu. In Thirty-Sixth AAAI Conference on Artificial Intelligence (AAAI'22) 2022.

PDF Abstract BibTex Slides

LANTERN: Boredom-conscious Natural Language Description Generation of Query Execution Plans for Database Education

Peng Chen, Hui Li, Sourav Bhowmick, Shafiq Joty, and Weiguo Wang. In Proceedings of 2022 ACM SIGMOD International Conference on Management of Data (Demo) (SIGMOD'22 (Demo)) 2022.

PDF Abstract BibTex Slides

PIANO: Influence Maximization Meets Deep Reinforcement Learning

Hui Li, Mengting Xu, Sourav Bhowmick, Shafiq Joty, Changsheng Sun, and Jiangtao Cui. In IEEE Transactions on Computational Social Systems (IEEE TCSS) 2022.

PDF Abstract BibTex

NLP4Vis: Natural Language Processing for Information Visualization

Shafiq Joty and Enamul Hoque. In Proceedings of the 2022 IEEE Vis Conference (IEEE Vis'22) 2022.

PDF Abstract BibTex Slides

2021

Align before Fuse: Vision and Language Representation Learning with Momentum Distillation

Junnan Li, Ramprasaath R., Akhilesh Deepak, Shafiq Joty, Caiming Xiong, and Steven Hoi. In 2021 Conference on Neural Information Processing Systems (NeurIPS'21 (spotlight ~3%)) 2021.

PDF Abstract BibTex Slides

Straight to the Gradient: Learning to Use Novel Tokens for Neural Text Generation

Xiang Lin, Simeng Han, and Shafiq Joty. In Thirty-eighth International Conference on Machine Learning (ICML'21 (as long talk ~3%)) 2021.

PDF Abstract BibTex Slides

Cross-model Back-translated Distillation for Unsupervised Machine Translation

Xuan-Phi Nguyen, Shafiq Joty, Thanh-Tung Nguyen, Wu Kui, and Ai Ti. In Thirty-eighth International Conference on Machine Learning (ICML'21) 2021.

PDF Abstract BibTex Slides

CodeT5: Identifier-aware Unified Pre-trained Encoder-Decoder Models for Code Understanding and Generation

Yue Wang, Weishi Wang, Shafiq Joty, and Steven Hoi. In the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP'21) 2021.

PDF Abstract BibTex Slides

Effective Fine-tuning Methods for Cross-lingual Adaptation

Tao Yu, and Shafiq Joty. In the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP'21) 2021.

PDF Abstract BibTex Slides

A Unified Speaker Adaptation Approach for ASR

Yingzhu Zhao, Chongjia Ni, Cheung-Chi LEUNG, Shafiq Joty, Eng Siong, and Bin Ma. In the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP'21) 2021.

PDF Abstract BibTex Slides

GeDi: Generative Discriminator Guided Sequence Generation

Ben Krause, Akhilesh Deepak, Bryan McCann, Nitish Shirish, Shafiq Joty, Richard Socher, and Nazneen Fatema. In the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP'21 Findings) 2021.

PDF Abstract BibTex Slides

UXLA: A Robust Unsupervised Data Augmentation Framework for Cross-Lingual NLP

M Saiful, Tasnim Mohiuddin, and Shafiq Joty. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics (ACL'21), pages 1978–1992, 2021.

Abstract BibTex Slides

A Conditional Splitting Framework for Efficient Constituency Parsing

Thanh-Tung Nguyen, Xuan-Phi Nguyen, Shafiq Joty, and Xiaoli Li. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics (ACL'21) , pages xx–-xx, 2021.

PDF Abstract BibTex Slides

Reliability Testing for Natural Language Processing Systems

Samson Tan, Shafiq Joty, Kathy Baxter, Araz Taeihagh, Gregory A., and Min-Yen Kan. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics (ACL'21), pages 4153–4169, 2021.

PDF Abstract BibTex Slides

MulDA: A Multilingual Data Augmentation Framework for Low-Resource Cross-Lingual NER

Linlin Liu, Bosheng Ding, Lidong Bing, Shafiq Joty, Luo Si, and Chunyan Miao. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics (ACL'21) , pages xx–-xx, 2021.

PDF Abstract BibTex Slides

AugVic: Exploiting BiText Vicinity for Low-Resource NMT

Tasnim Mohiuddin, M Saiful, and Shafiq Joty. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics (ACL'21 Findings) , pages xx–-xx, 2021.

PDF Abstract BibTex Slides

Code-Mixing on Sesame Street: Dawn of the Adversarial Polyglots

Samson Tan, and Shafiq Joty. In Proceedings of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL'21) , pages xx–-xx, 2021.

PDF Abstract BibTex Slides

RST Parsing from Scratch

Thanh-Tung Nguyen, Xuan-Phi Nguyen, Shafiq Joty, and Xiaoli Li. In Proceedings of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL'21) , pages xx–-xx, 2021.

PDF Abstract BibTex Slides

Improving Zero and Few-Shot Abstractive Summarization with Intermediate Fine-tuning and Data Augmentation

Alexander Fabbri, Simeng Han, Haoyuan Li, Haoran Li, Marjan Ghazvininejad, Shafiq Joty, Dragomir Radev, and Yashar Mehdad. In Proceedings of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL'21) , pages xx–-xx, 2021.

PDF Abstract BibTex Slides

Addressing the Vulnerability of NMT in Input Perturbations

Weiwen Xu, AiTi Aw, Yang Ding, Kui Wu, and Shafiq Joty. In Proceedings of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Industry Track) (NAACL'21) , pages xx–-xx, 2021.

Abstract BibTex Slides

Rethinking Coherence Modeling: Synthetic vs. Downstream Tasks

Tasnim Mohiuddin*, Prathyusha Jwalapuram*, Xiang Lin*, and Shafiq Joty*. In Proceedings of the European Chapter of the ACL (EACL'21) , pages x–-x, 2021.

PDF Abstract BibTex Slides

Span-Level Emotion Cause Analysis by BERT-based Graph Attention Network

Xiangju Li, Wei Gao, Shi Feng, Wang Daling, and Shafiq Joty. In Proceedings of The 30th ACM International Conference on Information and Knowledge Management (CIKM'21 (short paper)) , pages xx-xx, 2021.

Abstract BibTex Slides

Span-level Emotion Cause Analysis with Neural Sequence Tagging

Xiangju Li, Wei Gao, Shi Feng, Wang Daling, and Shafiq Joty. In Proceedings of The 30th ACM International Conference on Information and Knowledge Management (CIKM'21 (short paper)) , pages xx-xx, 2021.

Abstract BibTex Slides

Preventing Early Endpointing for Online Automatic Speech Recognition

Yingzhu Zhao, Chongjia Ni, Cheung-Chi Leung, Shafiq Joty, Eng Siong, and Bin Ma. In International Conference on Acoustics, Speech, and Signal Processing (ICASSP'21) , pages xx - xx, 2021.

PDF Abstract BibTex Slides

Towards Enhancing Database Education: Natural Language Generation Meets Query Execution Plans

Weiguo Wang, Sourav S, Hui Li, Shafiq Joty, and Siyuan Liu. In Proceedings of 2021 ACM SIGMOD International Conference on Management of Data (SIGMOD'21) , pages x - x, 2021.

Abstract BibTex Slides

2020

Data Diversification: An Elegant Strategy for Neural Machine Translation

Xuan-Phi Nguyen, Shafiq Joty, Wu Kui, and Ai Ti. In 2020 Conference on Neural Information Processing Systems (NeurIPS'20) 2020.

PDF Abstract BibTex Slides

Self-Supervised Relationship Probing

Jiuxiang Gu, Jason Kuen, Shafiq Joty, Jianfei Cai, Vlad Morariu, Handong Zhao, and Tong Sun. In 2020 Conference on Neural Information Processing Systems (NeurIPS'20) 2020.

PDF Abstract BibTex Slides

LNMap: Departures from Isomorphic Assumption in Bilingual Lexicon Induction Through Non-Linear Mapping in Latent Space

Tasnim Mohiuddin, M Saiful, and Shafiq Joty. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP'20), pages 2712–2723, 2020.

PDF Abstract BibTex Slides

Pronoun-Targeted Finetuning for NMT with Hybrid Losses

Prathyusha Jwalapuram, Shafiq Joty, and Youlin Shen. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP'20), pages 2267–2279, 2020.

PDF Abstract BibTex Slides

Mind Your Inflections! Improving NLP for Non-Standard English with Base-Inflection Encoding

Samson Tan, Shafiq Joty, Lav R., and Min-Yen Kan. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP'20), pages 5647–5663, 2020.

PDF Abstract BibTex Slides

Response Selection for Multi-Party Conversations with Dynamic Topic Tracking

Weishi Wang, Shafiq Joty, and Steven Hoi. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP'20), pages 6581–6591, 2020.

PDF Abstract BibTex Slides